Legal and Ethical Risks of AI-Driven Solutions in Project Delivery

Learn how to use AI-driven solutions responsibly in project delivery. Discover how to enhance delivery assurance, uncover hidden risks, and stay compliant with Canadian privacy and employment laws.

AI IN PROJECT MANAGEMENT

Grant DeCecco

6/24/2025 · 4 min read

AI Ethics in the PM Office: How to Stay Compliant While Delivering Smarter

Disclaimer: This article is for educational purposes only and does not constitute legal advice. If you're making decisions involving AI, data privacy, or employment law, consult a qualified legal professional.

You’re Already Using AI ... But Is It Compliant?

If you’re leading a project or IT function, you’re likely experimenting with AI-driven solutions—tools that summarize meetings, forecast timelines, or even recommend resource allocations.

That’s a smart move. These tools can enhance delivery assurance, uncover hidden risks, and even intelligently coach project teams to perform better.

But even basic AI use may trigger obligations under privacy and employment law. Once AI starts influencing project outcomes or touching HR data, you may be operating in a regulated space.

This blog gives you a practical overview of the legal and ethical risks of AI use in project environments, with a focus on compliance, fairness, and risk management.

What Counts as “AI” in a Project Context?

Not all automation is AI, but the line is blurry. Here’s how to tell:

If your tool uses historical data, makes predictions, or offers suggestions based on patterns rather than fixed rules, it likely falls under machine learning or generative AI. And that carries risk.

Examples in the PMO:

AI-powered forecasting tools that predict delivery delays

Resume screening software that prioritizes candidates

Chatbots answering employee queries with project policies

Tools that suggest resourcing decisions based on performance data

If any of these influence decisions about people, money, or scope, they need oversight and disclosure.

The Legal and Ethical Framework (Canadian Focus)

Canada doesn’t yet have a unified AI law, but there are laws that affect how you use AI in practice. Here's what you need to know:

1. Privacy and Consent

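Federal: PIPEDA governs how private-sector organizations handle personal information in the course of commercial activity across Canada. For employee data, it applies directly only to federally regulated workplaces.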

Provincial: BC, Alberta, and Quebec have additional laws. Quebec’s Law 25 is the most stringent and requires:

Privacy Impact Assessments (PIAs) if data leaves the province

Default confidentiality settings

Disclosure of automated decision-making

If you’re inputting employee data into an AI tool—even for internal planning—you may be triggering legal obligations.

2. Bias and Fairness

AI trained on historical project or hiring data may carry forward old patterns—like prioritizing certain job titles or underrepresenting certain roles.

If your tool unintentionally disadvantages a protected group, that can still amount to discrimination under Canadian human rights law, where intent is not required. Courts are unlikely to accept “the AI made the decision” as a defense.
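One rough way to spot the kind of pattern described above is to compare outcome rates across groups in a tool's decision log. The sketch below is illustrative only: the record format, the group labels, and the 0.8 threshold are assumptions. The threshold is a screening heuristic borrowed from the US "four-fifths" guideline, not a Canadian legal test, so a flag here is a prompt for human review rather than a finding of discrimination.

```python
from collections import Counter


def selection_rates(records):
    """Return the share of positive outcomes per group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def flag_disparities(rates, threshold=0.8):
    """List groups whose rate is below threshold times the highest group's rate.

    The 0.8 default is an assumed screening heuristic, not a legal standard.
    """
    best = max(rates.values())
    if best == 0:
        return []  # nobody was selected, so there is nothing to compare
    return sorted(g for g, r in rates.items() if r / best < threshold)


# Hypothetical decision log: (group label, was the candidate shortlisted?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(sample)
flagged = flag_disparities(rates)
print(rates)
print(flagged)
```

A check like this only surfaces a gap in outcomes. Deciding whether the gap is justified still needs a human reviewer and, where the stakes are high, legal advice.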

3. Transparency and Disclosure

In Ontario, Bill 149 (the Working for Workers Four Act, 2024) will require employers to disclose the use of AI in publicly advertised job postings starting January 1, 2026. Even before that requirement takes effect, disclosure is a best practice.

If AI tools are used in hiring, performance evaluation, or promotion decisions, candidates and employees should be made aware, clearly and in writing.

4. Accountability

Your PMO or IT function is liable for the output of any AI tools it uses.

Recent cases in Canada show that even when the AI gets it wrong, like the Air Canada chatbot hallucinating refund policies, the organization remains accountable. The same applies to internal tools that affect people, budgets, or project outcomes.

Common AI-Driven Solutions in the PMO—And Their Compliance Risks

Use this table to audit your current AI-driven solutions and their potential exposures:

| Use Case | Risk Area | AI-Driven Impact | Key Questions |
| --- | --- | --- | --- |
| Project forecasting tools | Accuracy, data quality | Enhance delivery assurance | Is the model based on relevant, up-to-date data? |
| Resource allocation engines | Bias, fairness, transparency | Uncover hidden risks in team capacity | Can we explain how assignments were determined? |
| Talent development recommendations | Profiling, consent | Intelligently coach project teams | Are career paths equitably recommended? |
| Resume screening or ranking tools | Discrimination, privacy | Reduce bias or amplify it | Is AI use disclosed in hiring processes? |

If you can't answer these questions confidently, it may be time to slow down before scaling up.

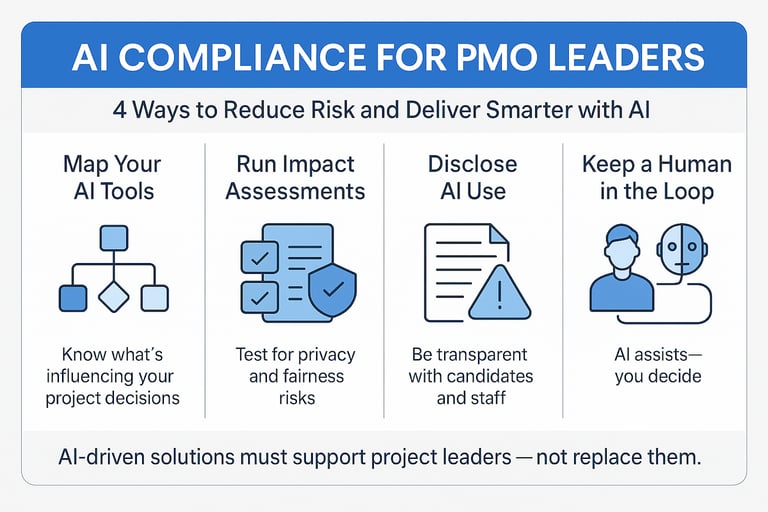

Practical Steps to Reduce AI Risk in the PMO

Start with a few core habits:

1. Map your AI exposure

Make a list of tools in use that generate or automate decision making, especially those touching personal or project critical data.

2. Run a Privacy and Risk Impact Assessment

Especially if using tools with HR or delivery data. Quebec mandates this. For others, it’s a smart defense.

3. Vet vendors carefully

Ensure contracts include:

Data ownership clauses

Error liability terms

Audit rights

4. Create an internal AI policy

Limit what tools staff can use (especially public ones like ChatGPT). Set expectations around responsible use and human oversight.

5. Disclose, even if not required yet

Candidates, team members, and stakeholders deserve to know when AI is involved. Transparency builds trust and resilience.
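The habits above can be tracked in something as simple as a small tool inventory. The following is a minimal sketch, not a compliance system: the `AITool` fields, the sample tools, and the rules in `open_actions` are all hypothetical and would need to be adapted to your own obligations and legal advice.

```python
from dataclasses import dataclass


@dataclass
class AITool:
    """One entry in a hypothetical PMO inventory of AI tools."""
    name: str
    influences_decisions: bool          # affects people, money, or scope
    uses_personal_data: bool            # employee or candidate data
    data_leaves_province: bool = False
    assessment_done: bool = False       # privacy and risk impact assessment on file
    disclosed: bool = False             # affected people have been told AI is used


def open_actions(tool):
    """Return the follow-up actions still outstanding for one tool (assumed rules)."""
    actions = []
    needs_assessment = tool.uses_personal_data or tool.data_leaves_province
    if needs_assessment and not tool.assessment_done:
        actions.append("run privacy and risk impact assessment")
    if tool.influences_decisions and not tool.disclosed:
        actions.append("disclose AI use")
    return actions


# Hypothetical inventory entries
inventory = [
    AITool("meeting summarizer", influences_decisions=False, uses_personal_data=True),
    AITool("resume ranker", influences_decisions=True, uses_personal_data=True,
           assessment_done=True),
    AITool("schedule forecaster", influences_decisions=True, uses_personal_data=False,
           disclosed=True),
]

report = {tool.name: open_actions(tool) for tool in inventory}
for name, actions in report.items():
    print(name, "->", actions or "no open actions")
```

Keeping the rules in one small function makes it easy to tighten them later, for example by adding a check for vendor audit rights.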

AI Compliance Readiness: A Self-Assessment for PMOs

Answer yes or no:

Data & Privacy

Do you know what employee or project data your AI tools use?

Do you know whether any of that data is stored or processed outside of Canada?

Have you conducted a privacy or risk impact assessment?

Fairness & Oversight

Are AI decisions reviewable and correctable by a human?

Have you tested for bias in the tool’s outcomes?

Are your teams trained to question AI-generated outputs?

Transparency & Policy

Do you disclose when AI is used in hiring or evaluation?

Do you have an AI policy governing staff use?

Are contracts with vendors clear on IP and liability?

✅ If you answered yes to 8 or more, you’re likely on track.

❌ Fewer than 5? It’s time to review your approach before AI exposure becomes legal exposure.
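For teams that run this check across several projects, the scoring can be sketched in a few lines. The thresholds come from the two lines above. The "partial coverage" label for scores of 5 to 7 is an assumption, since the checklist gives no verdict for that range, and the function name is hypothetical.

```python
CHECKLIST_SIZE = 9  # three questions in each of the three groups


def readiness(yes_count):
    """Map a count of 'yes' answers to a readiness label."""
    if not 0 <= yes_count <= CHECKLIST_SIZE:
        raise ValueError(f"yes_count must be between 0 and {CHECKLIST_SIZE}")
    if yes_count >= 8:
        return "likely on track"
    if yes_count < 5:
        return "review your approach"
    # 5 to 7: no verdict is given for this range; this label is an assumption.
    return "partial coverage"


# Hypothetical answers to the nine questions, in order (True means "yes")
answers = [True, True, False, True, False, True, True, False, True]
result = readiness(sum(answers))
print(sum(answers), "yes answers:", result)
```

A score in the middle range is a reasonable cue to look at which group of questions produced the "no" answers before scaling any tool further.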

Final Thought: AI Should Support, Not Supplant, Project Leaders

The goal isn’t to replace human judgment. It’s to enhance delivery assurance, flag blind spots early, and intelligently coach project teams toward better results.

With the right governance, AI-driven solutions can become a trusted partner in every phase of your project lifecycle, from scope planning to retrospectives.